Description

Optimize your AI agents by testing prompt performance — automatically and at scale.

Instead of guessing which prompt works better, this workflow A/B tests different prompts using Supabase sessions and OpenAI models, so you can measure response quality, consistency, and performance over time.

Whether you're fine-tuning a chatbot or deploying LLMs in production, this workflow lets you experiment like a scientist — without coding or manual tracking.

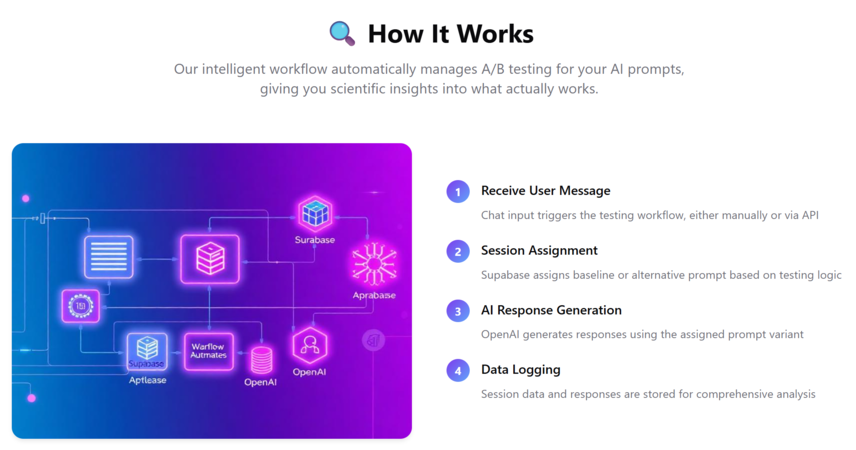

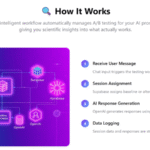

🔍 How It Works:

✅ Receive a user message via chat (manual or API-triggered)

✅ Supabase checks for an existing test session

✅ If no session exists, the workflow assigns either the baseline or the alternative prompt

✅ Based on the prompt path, AI generates a response using OpenAI

✅ Session data and prompt path are logged for future analysis
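The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the workflow's actual implementation: `SessionStore` is an in-memory stand-in for the Supabase session table, `fake_llm` is a placeholder for the OpenAI call, the prompt texts are invented, and the random 50/50 assignment is an assumption about how a new session gets its path.

```python
import random
from dataclasses import dataclass, field

# Hypothetical prompt texts; the real workflow defines its own.
PROMPTS = {
    "baseline": "You are a helpful assistant.",
    "alternative": "You are a concise assistant. Answer in two sentences or fewer.",
}


@dataclass
class SessionStore:
    """In-memory stand-in for the Supabase/Postgres session table."""
    paths: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

    def get_or_assign(self, session_id, rng=random):
        # Reuse the stored path if the session exists; otherwise pick one
        # at random (assumed assignment rule) and remember it.
        if session_id not in self.paths:
            self.paths[session_id] = rng.choice(sorted(PROMPTS))
        return self.paths[session_id]


def fake_llm(system_prompt, user_message):
    """Placeholder for the OpenAI call; returns a tagged echo."""
    return f"[{system_prompt[:12]}...] reply to: {user_message}"


def handle_message(store, session_id, user_message, llm=fake_llm, rng=random):
    """Route one chat message through the session's prompt path and log it."""
    path = store.get_or_assign(session_id, rng)
    reply = llm(PROMPTS[path], user_message)
    store.log.append({
        "session_id": session_id,
        "prompt_path": path,
        "message": user_message,
        "reply": reply,
    })
    return path, reply
```

Calling `handle_message` twice with the same `session_id` returns the same prompt path both times, which is what keeps the test and control groups cleanly separated across a conversation.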

⚙️ Why Use This AI Prompt Testing Flow?

🧠 Prompt Optimization – Compare different versions head-to-head

⚖️ True A/B Logic – Clean separation of test vs control

📊 Session-Aware Routing – Keeps each user on the same prompt path across multiple messages

🗃️ Postgres Memory – Persist responses for deeper evaluation

📈 Measurable Performance – Add your own scoring logic easily

🔄 Model Swap Ready – Swap LLMs for multi-model comparison
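As one illustration of the "add your own scoring logic" idea, the sketch below averages a score per prompt path over logged rows. Everything here is an assumption for demonstration: the row shape (`prompt_path`, `reply`) is a guess at what the log might contain, and `score_reply` is a deliberately simple, hypothetical metric that you would replace with your own.

```python
from collections import defaultdict
from statistics import mean


def score_reply(reply: str) -> float:
    """Hypothetical metric: 1.0 for replies of 50 characters or fewer,
    decreasing as the reply gets longer."""
    return min(1.0, 50 / max(len(reply), 1))


def mean_score_by_path(rows, scorer=score_reply):
    """Group logged rows by prompt path and average their scores."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row["prompt_path"]].append(scorer(row["reply"]))
    return {path: mean(scores) for path, scores in grouped.items()}
```

Passing a different `scorer` (for example, a rubric check or a human rating lookup) leaves the grouping logic unchanged, so the baseline and alternative paths are always compared on the same metric.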

👥 Who’s It For?

✔️ Prompt Engineers & LLM Developers

✔️ Chatbot Product Teams

✔️ AI Tool Builders

✔️ Growth & Experimentation Engineers

✔️ Researchers in NLP/LLMs

🔌 Works Seamlessly With:

- OpenAI (response generation)
- Supabase/Postgres (session tracking)
- n8n or LangChain (flow logic)
- Alternative LLMs (Claude, Gemini, etc. via OpenRouter)

💡 Stop guessing which prompt is “best.”

Use this AI-powered testing framework to get real answers with real users, in real time.

🚀 Try it now — Test smarter. Optimize faster. Deploy better.

Project Link - https://preview--ai-prompt-brew.lovable.app/