Description

Build your own chatbot that remembers conversation context, retrieves knowledge from your documents, and answers from that retrieved material. It is powered by RAG (Retrieval-Augmented Generation), with Pinecone handling vector search and PostgreSQL handling session memory.

Whether you're creating a customer support bot, research assistant, or internal helpdesk, this n8n workflow gives you full control over memory, retrieval, and intelligence.

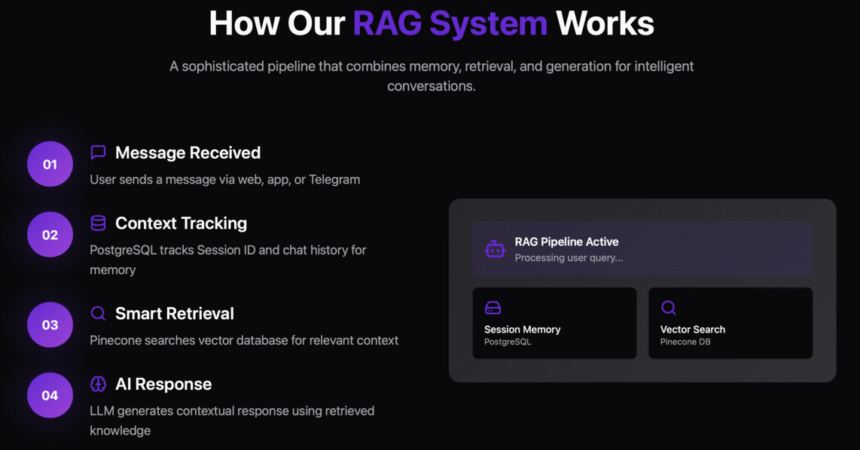

🔹 How It Works:

✅ User sends a message (via web, app, or Telegram)

✅ Postgres tracks and updates Session ID and chat history

✅ The message is passed to a Retriever Agent

✅ Pinecone searches the vector database for relevant context chunks

✅ Retrieved context + user query are sent to an LLM (e.g., GPT-4o)

✅ The final AI response is generated and sent back to the user
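
The steps above can be sketched in a few lines of Python. This is only an illustration of the flow, not the workflow's actual n8n nodes: the bag-of-words `embed` function stands in for a real embedding model, the in-memory `retrieve` stands in for the Pinecone search, the `history` list stands in for the Postgres chat history, and `llm` is any callable you supply. All function names here are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Callable

def embed(text: str) -> Counter:
    # Toy bag-of-words vector; an assumption standing in for a real embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse word-count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    # Stand-in for the vector database search: rank chunks by similarity to the query.
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:top_k]

def build_prompt(history: list[tuple[str, str]], context: list[str], query: str) -> str:
    # Combine retrieved context, prior turns, and the new question into one prompt.
    lines = ["Answer using only the context below.", "", "Context:"]
    lines += [f"- {c}" for c in context]
    lines += ["", "Conversation so far:"]
    lines += [f"{role}: {text}" for role, text in history]
    lines += ["", f"user: {query}"]
    return "\n".join(lines)

def handle_message(history: list[tuple[str, str]], chunks: list[str],
                   query: str, llm: Callable[[str], str]) -> str:
    # One chat turn: retrieve context, call the model, then record both messages.
    context = retrieve(query, chunks)
    reply = llm(build_prompt(history, context, query))
    history.append(("user", query))
    history.append(("assistant", reply))
    return reply
```

Passing the model in as a plain callable keeps the sketch independent of any particular LLM provider; in the real workflow that role is played by the LLM node.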

It Automates:

🗂️ Session-based Context Management using PostgreSQL

🧠 Semantic Search using Pinecone Vector DB

📚 Knowledge Retrieval from indexed documents

💬 LLM-Generated Responses via OpenAI or any LLM

🔁 Memory-aware Interactions with thread/session tracking
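
As a rough picture of what session-based context management involves, the sketch below stores and reloads chat turns keyed by session ID. It makes several assumptions: the `chat_messages` table and its columns are hypothetical, not the workflow's actual schema, and Python's built-in `sqlite3` is used purely so the example runs without a database server. With PostgreSQL the same idea applies, though the driver, placeholder syntax, and column types would differ.

```python
import sqlite3

def open_store(path: str = ":memory:") -> sqlite3.Connection:
    # Hypothetical schema: one row per chat message, grouped by session_id.
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS chat_messages (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               session_id TEXT NOT NULL,
               role TEXT NOT NULL,
               content TEXT NOT NULL
           )"""
    )
    return conn

def save_message(conn: sqlite3.Connection, session_id: str, role: str, content: str) -> None:
    # Append one turn to the session's history.
    conn.execute(
        "INSERT INTO chat_messages (session_id, role, content) VALUES (?, ?, ?)",
        (session_id, role, content),
    )
    conn.commit()

def load_history(conn: sqlite3.Connection, session_id: str, limit: int = 10) -> list[tuple[str, str]]:
    # Fetch the most recent `limit` turns, then return them oldest-first.
    rows = conn.execute(
        "SELECT role, content FROM chat_messages "
        "WHERE session_id = ? ORDER BY id DESC LIMIT ?",
        (session_id, limit),
    ).fetchall()
    return list(reversed(rows))
```

Capping the history with `limit` is one simple way to keep the prompt from growing without bound as a conversation gets longer.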

💡 Why Choose This Workflow?

🔍 Accurate Retrieval – Your chatbot answers from context retrieved from your indexed documents, not just guesses

🧠 Contextual Memory – Understands prior questions in a conversation

📚 Document-Aware Chat – Connect your own PDFs, Notion pages, wikis, etc.

🛠️ Modular & Scalable – Swap out Pinecone/Postgres for other services

🤖 Customizable Bot Logic – Easily modify prompts, fallback behavior, and logging
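
To make the "modular" and "fallback behavior" points more concrete, here is one possible shape for them. Everything named here is an assumption for illustration: the `Retriever` interface, the `StaticRetriever` demo backend, the `min_score` threshold of 0.75, and the fallback message are hypothetical and not taken from the workflow itself.

```python
from dataclasses import dataclass
from typing import Callable, Protocol

class Retriever(Protocol):
    def search(self, query: str, top_k: int) -> list[tuple[str, float]]:
        """Return (chunk_text, similarity_score) pairs, best first."""
        ...

@dataclass
class StaticRetriever:
    # Demo backend with canned results; a class wrapping Pinecone or another
    # vector store could expose the same search method and be swapped in.
    results: list[tuple[str, float]]

    def search(self, query: str, top_k: int) -> list[tuple[str, float]]:
        return self.results[:top_k]

FALLBACK = "I couldn't find that in the indexed documents."

def select_context(retriever: Retriever, query: str,
                   top_k: int = 3, min_score: float = 0.75) -> list[str]:
    # Keep only chunks whose similarity score clears the threshold.
    hits = retriever.search(query, top_k)
    return [text for text, score in hits if score >= min_score]

def answer(retriever: Retriever, query: str, llm: Callable[[str], str]) -> str:
    # Fall back to a fixed message instead of calling the model with no context.
    context = select_context(retriever, query)
    if not context:
        return FALLBACK
    return llm("Context:\n" + "\n".join(context) + "\n\nQuestion: " + query)
```

Because `answer` depends only on the `search` method, changing the vector store means writing one new class rather than touching the bot logic.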

👤 Who Is This For?

✔️ Founders building customer-facing bots

✔️ Internal teams managing document Q&A tools

✔️ Product builders creating AI chat assistants

✔️ Researchers enabling smart search

✔️ Educators building tutoring or FAQ bots

🔗 Consult for Integrations:

🔗 Pinecone Vector DB – For storing and searching embedded chunks

🔗 PostgreSQL – For session management and memory persistence

🔗 OpenAI / GPT-4o – For smart, context-aware responses

🔗 n8n HTTP/Database Nodes – For building the chat + memory pipeline

🚀 Get Started Today!

Scale your bot from simple Q&A to truly intelligent, memory-powered conversations.
