Description

Run A/B tests on your AI agent’s prompts in production with this smart, database-driven workflow. Randomly assign chat sessions to either a baseline or experimental prompt, track results, and compare outcomes—all from within n8n.

Perfect for product teams, prompt engineers, or researchers optimizing LLM responses for quality, engagement, or conversions.

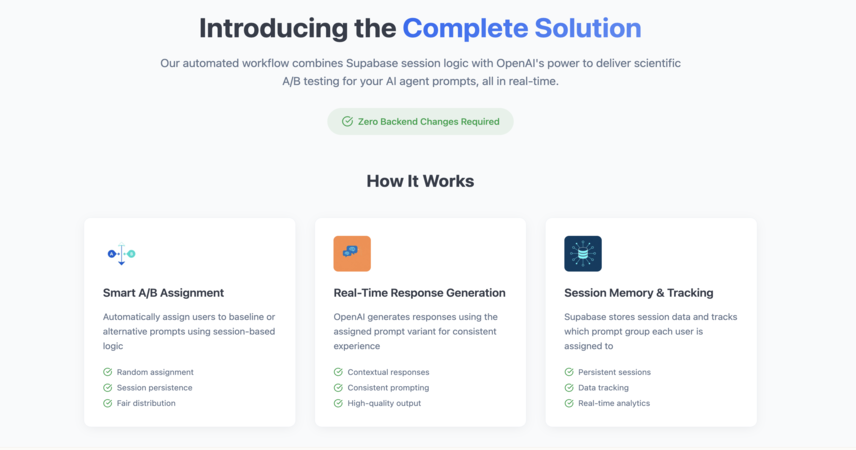

🧠 How It Works:

✅ ✉️ New message arrives in chat ✅ 🔍 Check if the session ID already exists in Supabase ✅ 🎲 If new, randomly assign a prompt (baseline or alternative) ✅ 🗃️ Store session ID and assigned prompt in Supabase ✅ 💬 Generate response using assigned prompt via OpenAI (or compatible model) ✅ 📊 Track performance and compare results across sessions

🔍 It Automates:

✅ Random assignment of new chat sessions to control/test prompts ✅ Consistent prompt use throughout a session ✅ Database-backed session tracking (no cookies or external state needed) ✅ Structured prompt experimentation within your live agent pipeline ✅ Simple scaling to multiple variants (A/B/C...) with minimal changes

💡 Why Choose This Workflow:

✅ Run prompt experiments without writing backend code ✅ Persistently associate sessions with prompt variants ✅ Compare model behavior with subtle prompt changes ✅ Improve your AI agent iteratively, based on real-world usage ✅ Easy to expand for logging, metrics collection, or user feedback

👤 Who Is This For:

✅ AI teams optimizing prompt design ✅ UX researchers testing conversational tone or style ✅ Product managers experimenting with feature wording ✅ Developers comparing OpenAI parameters like temperature or system prompts ✅ Educators or researchers running controlled LLM experiments

🔗 Integrations:

✅ Supabase (stores session-prompt assignments) ✅ OpenAI / Anthropic / Ollama (handles LLM responses) ✅ n8n Chat UI (for internal testing or embedded chat) ✅ Optional logging or analytics tools (PostHog, Segment, etc.)

🧪 Run Clean Prompt Experiments — No Guesswork With persistent A/B prompt testing inside your workflow, you can stop guessing and start optimizing what your AI says, how it says it, and what performs best.

Link : [https://lovable.dev/projects/7026e079-73eb-43a6-bf3b-b256d6b9c271]

Visit Main Gignaati Website

Visit Main Gignaati Website Learn with Gignaati Academy

Learn with Gignaati Academy Explore Workbench

Explore Workbench Partner with Us

Partner with Us Invisible Enterprises – Buy on Amazon

Invisible Enterprises – Buy on Amazon Terms & Conditions

Terms & Conditions Privacy Policy

Privacy Policy