Description

Build an agentic crawler in n8n that extracts structured data from a website, using OpenAI for reasoning and Supabase for storage.

Perfect for anyone needing targeted info (like social links, contact pages, etc.) across websites without manual digging.

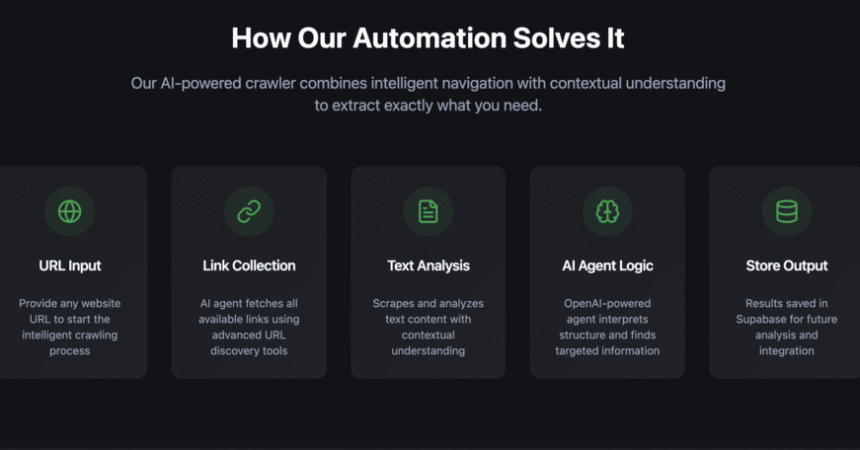

🔹 How It Works:

✅ 🌍 Input URL – Provide any website

✅ 🔗 Link Collection – The AI agent fetches all available links using the URLs Tool

✅ 📄 Text Analysis – It then scrapes and analyzes text content using the Text Tool

✅ 🧠 AI Agent Logic – The OpenAI-powered agent interprets the structure and finds the info you need (e.g., social links, emails, contact details)

✅ 📦 Store Output – Results are saved in Supabase (or your preferred DB) for later use
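The link-collection and text-analysis steps above can be sketched outside n8n to show what each tool hands to the agent. This is a minimal illustration using only Python's standard library, not the template's actual nodes. The names `PageParser`, `collect_links`, and `extract_text` are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag


class PageParser(HTMLParser):
    """Collects hyperlinks and visible text from one HTML document."""

    SKIPPED_TAGS = {"script", "style", "noscript"}

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []
        self.text_parts: list[str] = []
        self._skip_depth = 0  # > 0 while inside a skipped tag

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links and drop #fragments.
                absolute, _fragment = urldefrag(urljoin(self.base_url, href))
                if absolute not in self.links:
                    self.links.append(absolute)

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text outside script/style/noscript.
        if self._skip_depth == 0 and data.strip():
            self.text_parts.append(data.strip())


def collect_links(html: str, base_url: str) -> list[str]:
    """Rough stand-in for the URLs Tool: unique absolute links, in page order."""
    parser = PageParser(base_url)
    parser.feed(html)
    return parser.links


def extract_text(html: str) -> str:
    """Rough stand-in for the Text Tool: visible text joined by spaces."""
    parser = PageParser("")
    parser.feed(html)
    return " ".join(parser.text_parts)
```

In the workflow itself, the agent calls the equivalent tools on demand and then reasons over their output. The sketch only shows the kind of raw material (a link list and plain text) it works from.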

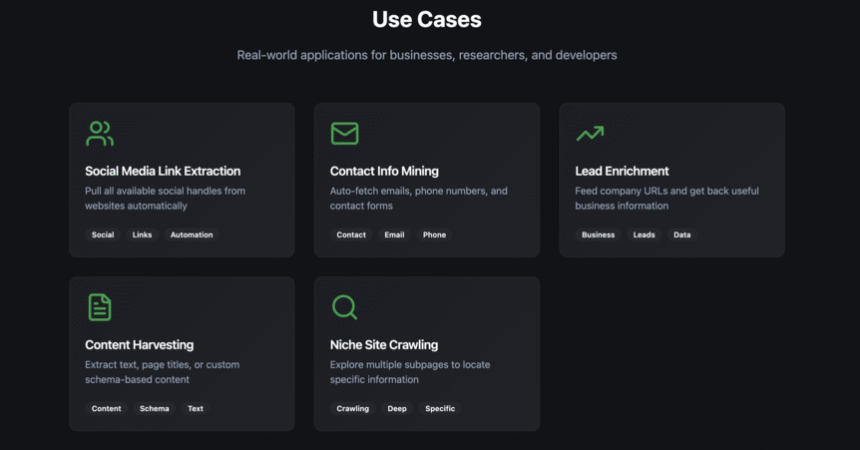

💼 Use Cases:

🔗 Social Media Link Extraction – Pull all available social handles from websites

📬 Contact Info Mining – Auto-fetch emails, phone numbers, and contact forms

🌐 Lead Enrichment – Feed company URLs and get back useful business info

📰 Content Harvesting – Extract text, page titles, or custom schema-based content

🔍 Niche Site Crawling – Explore multiple subpages to locate specific information
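For the social-link and contact-info use cases, a deterministic baseline can be handy for sanity-checking what the agent returns. The sketch below is an assumption-laden illustration, not part of the template: the host list in `SOCIAL_HOSTS`, the email pattern, and the function names are all hypothetical, and the pattern will miss unusual address formats.

```python
import re
from urllib.parse import urlparse

# Assumed set of hosts to treat as social profiles; extend as needed.
SOCIAL_HOSTS = {
    "twitter.com", "x.com", "linkedin.com",
    "facebook.com", "instagram.com", "youtube.com",
}

# Deliberately simple email pattern; not a full RFC 5322 matcher.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def social_links(links: list[str]) -> list[str]:
    """Keep only links whose host is a known social platform."""
    found = []
    for link in links:
        host = urlparse(link).netloc.lower()
        if host.startswith("www."):
            host = host[4:]
        if host in SOCIAL_HOSTS:
            found.append(link)
    return found


def emails_in(text: str) -> list[str]:
    """Return unique email-like strings in first-seen order."""
    # dict.fromkeys de-duplicates while preserving order.
    return list(dict.fromkeys(EMAIL_PATTERN.findall(text)))
```

Comparing this output with the agent's answer is one way to spot hallucinated or missed entries.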

🧠 Why It’s Smart:

⚙️ Agentic Navigation – The AI agent decides which subpages to open, much as a person browsing the site would

🛠️ Modular Tools – Customize schema, prompt, and tools to extract what you need

🔄 Self-Looping Logic – It can iterate across linked pages autonomously

🧠 LLM Reasoning – Understands context to find relevant data, not just keywords

🧰 Works with Supabase – Easy to extend into full-stack workflows with SQL, database triggers, or RPC functions
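To make the self-looping idea concrete, here is a minimal sketch of iterating across linked pages with a page limit and a visited set. It swaps the agent's judgment for a plain breadth-first walk, so it shows only the loop and its stopping conditions, not the LLM's choice of which link to follow. The `crawl` function and its `fetch_links` callback are hypothetical names.

```python
from collections import deque
from typing import Callable
from urllib.parse import urlparse


def crawl(
    start_url: str,
    fetch_links: Callable[[str], list[str]],
    max_pages: int = 10,
) -> list[str]:
    """Breadth-first walk over same-site links, visiting each URL once."""
    site = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited: list[str] = []

    # Stop when there is nothing left to visit or the page budget is spent.
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)
        for link in fetch_links(url):
            # Stay on the starting site and never queue a URL twice.
            if urlparse(link).netloc == site and link not in seen:
                seen.add(link)
                queue.append(link)
    return visited
```

A cap such as `max_pages` matters in the agentic version too, since each visited page costs tokens and time.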

🔧 Level of Effort:

🟡 Moderate – Basic setup takes ~15–20 minutes; advanced configuration (like a custom JSON schema) takes extra time

🧩 You’ll Need:

🔐 OpenAI API Key (for the agent’s reasoning and schema-based extraction)

🗂️ Supabase Project (to store input URLs + extracted results)

🧠 Prompt + JSON Schema (tell the agent what data to return)

🌍 Website URLs (entered manually or supplied by a trigger-based source such as Airtable, Notion, or a CSV file)
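As an illustration of how the schema and the Supabase project fit together, the sketch below defines an example JSON schema, checks an agent result for missing required fields, and prepares (without sending) an insert against Supabase's REST endpoint. The schema fields, the helper names, and the table and project values are hypothetical. Inside n8n the Supabase node would normally handle the request for you.

```python
import json
import urllib.request

# Hypothetical schema: the field names are examples, not the template's own.
CONTACT_SCHEMA = {
    "type": "object",
    "properties": {
        "emails": {"type": "array", "items": {"type": "string"}},
        "social_links": {"type": "array", "items": {"type": "string"}},
        "contact_page": {"type": "string"},
    },
    "required": ["emails", "social_links"],
}


def missing_fields(result: dict, schema: dict) -> list[str]:
    """Return required keys that are absent from the agent's JSON result."""
    return [key for key in schema.get("required", []) if key not in result]


def build_insert_request(
    project_url: str, api_key: str, table: str, row: dict
) -> urllib.request.Request:
    """Prepare, but do not send, a Supabase REST insert for one row."""
    return urllib.request.Request(
        url=f"{project_url}/rest/v1/{table}",
        data=json.dumps(row).encode("utf-8"),
        method="POST",
        headers={
            "apikey": api_key,
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "Prefer": "return=minimal",
        },
    )
```

Checking required fields before storing a row keeps incomplete agent answers out of the table. A stricter setup could validate value types as well.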

💡 Customization Tip:

Want to extract contact info and blog post metadata? Duplicate the crawler and tweak its schema. You can even use the outputs to auto-populate CRMs, send emails, or track site changes via alerts.

⚡ Give Your Bots a Brain — Crawl Smarter, Not Harder

From static pages to structured insights — let autonomous AI agents crawl the web for you.
